It’s remarkable that after 2 weeks, the a9 firmware update is still making the news. Since it launched, Panasonic released new cameras and Canon buttressed their mirrorless offering with lenses. In showbiz that’s called having legs. In cameras, it’s new tech everyone is still trying to figure out. What’s driving the news cycle is Sony’s Real-Time Tracking Autofocus.

The News Cycle

Sony’s game-changing AF tech is in the a9 firmware update. The camera calculates focus and locks onto a subject, leaving the photographer free to compose. I experienced “freeform composing” at an event in San Diego where Sony had the new firmware loaded on demo cameras. Even before the update added real-time tracking autofocus, I observed this about the a9:

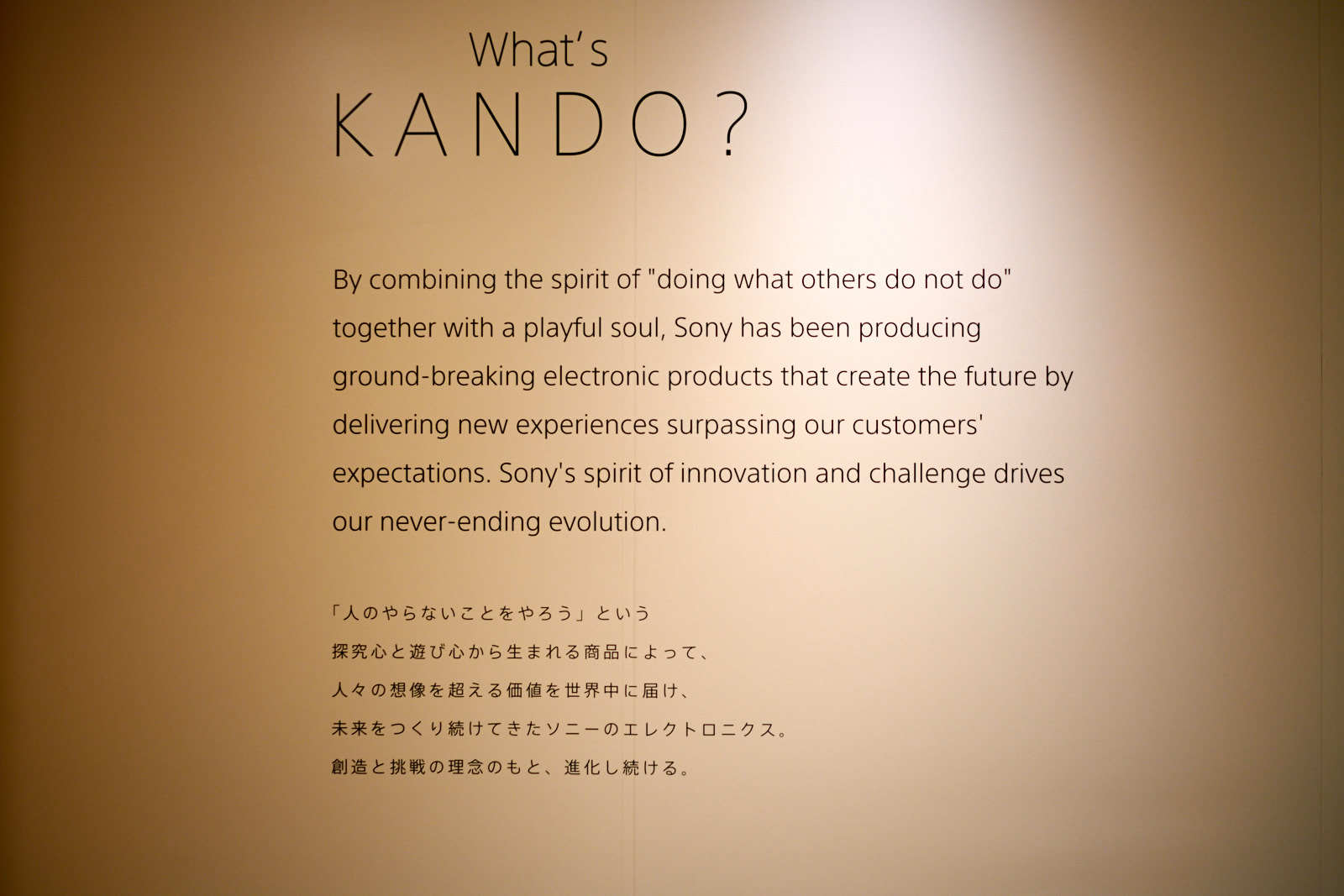

There’s nothing impeding the flow, and the camera acts almost bionically with you. It’s truly being in the moment with technology and creativity. Sony calls that Kando. I’m fascinated by it.

The fascination includes shooting sports, fashion, street, astro, and landscape. Back then, it was the focus speed and Eye-AF that engaged me. Now, the camera does all that and will lock onto and track an object you choose. At a sports venue, that’s a player or the ball, the cyclist or the bike.

To that end, Sony’s tech will take some getting used to, especially for old-school photogs who ignore every mode on a camera dial unless it’s “m” for manual.

I believe some of the reaction to Sony’s settings and confusion regarding modes is caused by the complexity of the tech and also the aesthetic behind it.

Sony wants the camera to get out of your way, so you can compose, and get the shot every time. That’s antithetical to how most photogs shoot now. I promise, once you experience and embrace it, it’ll change how you compose for the better.

Future of Photography

Here’s a simple analogy to Sony’s breakthroughs: Google search v. microfiche research. Another: Tiptronic shifting v. manual transmission.

There are those still angry that you can’t get a manual transmission in the US anymore on a new, luxury sports car. While I don’t think anyone misses microfiche, letting the camera do the hard work like an automatic is a new way of shooting.

It’s a way of shooting that I’ve embraced since the a9 launched. It’ll take a while, but other media are catching up. Larry Grace shoots air-to-air and we met at Miramar. On the first day, he was super frustrated placing the focus point in the frame. Once he understood that the camera can optionally and automagically do that, it all came together. For me too.

The best part is that, at the time, Larry and I were shooting without real-time tracking, just the regular tracking.

How Real-Time Tracking Autofocus Works

The a9 was already very good at holding focus on one subject even when someone or something passed between it and the camera, but it’s even more impressive now with this next-generation tracking. Here’s how the detection tree works:

- Eye

- Face

- Object

And it all happens in real time with no blackout, which is why I used a term like dynamic composing to describe what it’s like to shoot with the a9. Once the camera locks onto a subject, you’re free to follow it, recomposing while the subject moves and even if other objects get between you and it. Visual indicators show you what the camera is locked on, and a white box flashes to indicate the camera is capturing a frame.

Sony combined their existing Eye-AF with pattern detection, depth and color information. Real-Time Tracking Autofocus isolates an object in a scene and will follow it until the photographer lifts their finger off the AF button.
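For readers who think in code, the Eye, Face, Object order listed above can be read as a priority fallback: use the eye when one is detected, otherwise the face, otherwise the object. The sketch below is only an illustration of that reading, not Sony’s actual algorithm. The function name `pick_focus_target`, the `detections` dictionary, and the sample coordinates are all hypothetical.

```python
from typing import Optional, Tuple

# Priority order taken from the detection list above: eye, then face, then object.
PRIORITY = ("eye", "face", "object")

Position = Tuple[float, float]


def pick_focus_target(detections: dict) -> Optional[Tuple[str, Position]]:
    """Return (kind, position) for the highest-priority detection, or None.

    `detections` is a hypothetical per-frame result that maps a kind
    ("eye", "face", "object") to an (x, y) position in the frame.
    """
    for kind in PRIORITY:
        position = detections.get(kind)
        if position is not None:
            return kind, position
    return None


# Made-up frames: the subject gradually turns away from the camera.
frames = [
    {"eye": (0.52, 0.31), "face": (0.50, 0.35), "object": (0.50, 0.60)},
    {"face": (0.48, 0.36), "object": (0.49, 0.61)},  # eye no longer visible
    {"object": (0.47, 0.62)},                        # face no longer visible
]

for frame in frames:
    print(pick_focus_target(frame))
```

Under this reading, the camera never loses the subject when an eye or face disappears; it simply drops down to the next level of the tree and keeps tracking.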

With the electronic shutter, it’ll capture at up to 20 fps.

Follow the Bouncing Ball

In this photo, the a9 locked onto a volleyball at the beach as it was volleyed back and forth over the net.

Photos from Sony San Diego 19 taken with the a9.

Pick a Subject or an Eye

That’s a lot to take in, and it’s best experienced yourself at an event like Sony’s Be Alpha road show. To recap, Sony’s new tech does real-time tracking of a detected subject’s eye, plus subject recognition.

A photographer can pick a subject or choose an eye, and it even works on animals. Watch this video with my colleagues Brian Smith and Patrick Murphy-Racey, starting at 1:08, for a visual explanation of what the camera is doing.

The a9 is the best example of, “Doing what others do not do.” That’s how a camera company creates the future for photographers.

It’s up to them to embrace it.

The new a9 system firmware update, version 5.0, will launch in March 2019, and version 6.0, featuring Animal Eye-AF, will follow this summer.
